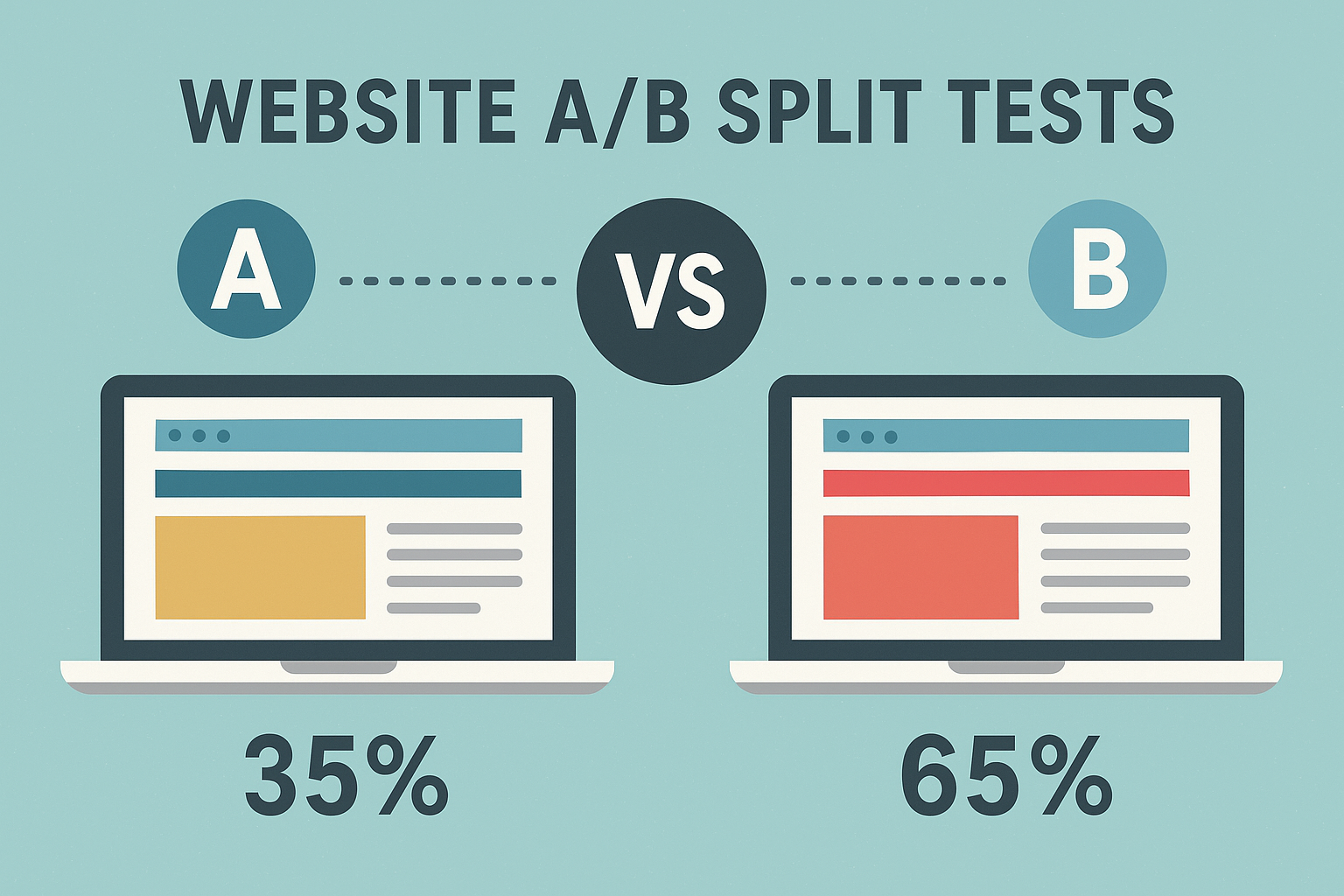

The difference between a good marketing campaign and a great one often comes down to testing. A/B testing, also known as split testing or bucket testing, is the practice of comparing two versions of a webpage, email, or other marketing asset to determine which performs better.

When executed correctly, A/B testing can dramatically increase your conversion rates, improve user experience, and maximize your return on investment.

However, many marketers approach A/B testing haphazardly, testing random elements without a strategic framework. This shotgun approach rarely yields meaningful results and can even lead to misguided decisions based on statistical noise rather than genuine insights.

The key to conversion-boosting A/B tests lies in following a systematic, data-driven methodology that ensures your tests are valid, actionable, and impactful.

In this comprehensive guide, we’ll walk through ten essential steps that will transform your A/B testing program from guesswork into a powerful conversion optimization engine. Whether you’re new to split testing or looking to refine your existing process, these steps will help you design, execute, and analyze tests that drive real business results.

Step 1: Establish Clear, Measurable Goals

Before you change a single pixel on your website or alter a word of copy, you need to define exactly what success looks like. This might seem obvious, but it’s surprising how many marketers launch A/B tests without clearly articulated goals, leading to confusion when it’s time to interpret results.

Start by identifying your primary conversion goal. This should be a specific, measurable action that directly contributes to your business objectives. Common conversion goals include:

- Email sign-ups for a newsletter

- Product purchases or completed transactions

- Free trial registrations

- Contact form submissions

- Content downloads like ebooks or whitepapers

- Account creations

- Video views or engagement metrics

Your primary goal should be singular and unambiguous. While you can track secondary metrics, having one clear north star ensures you can make definitive decisions about which variation wins.

Define your success metrics quantitatively. Instead of saying “increase conversions,” specify “increase email sign-up conversion rate from 3% to 4%.” This precision helps you calculate the sample size needed for statistical significance and gives you a concrete benchmark for success.

Consider the full conversion funnel. Sometimes optimizing for one metric can negatively impact another. For example, making a form shorter might increase submissions but decrease lead quality. Decide upfront which metrics matter most and how you’ll balance trade-offs if they arise.

Document your hypothesis. Write down what you expect to happen and why. For example: “Changing the call-to-action button from ‘Submit’ to ‘Get My Free Guide’ will increase conversions by 15% because it emphasizes the value proposition and reduces friction.” This hypothesis will guide your test design and help you learn from the results regardless of the outcome.
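A hypothesis is easier to hold yourself to when it is written down in a structured form. The Python sketch below shows one way to do that. The `ExperimentPlan` class and its field names are hypothetical and not part of any testing tool. For illustration only, it pairs the call-to-action change from the example hypothesis with the 3%-to-4% sign-up goal mentioned earlier. It also shows why the difference between absolute and relative lift matters: many sample size calculators ask for the relative figure.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ExperimentPlan:
    """A written-down hypothesis with a single primary metric (hypothetical structure)."""

    change: str
    primary_metric: str
    baseline_rate: float  # current conversion rate, e.g. 0.03 for 3%
    target_rate: float    # rate you expect the variation to reach
    rationale: str

    @property
    def absolute_lift(self) -> float:
        # Difference in conversion rate (0.01 = one percentage point)
        return self.target_rate - self.baseline_rate

    @property
    def relative_lift(self) -> float:
        # Lift as a share of the baseline; often entered as the relative minimum detectable effect
        return self.absolute_lift / self.baseline_rate


plan = ExperimentPlan(
    change="CTA copy: 'Submit' -> 'Get My Free Guide'",
    primary_metric="email sign-up conversion rate",
    baseline_rate=0.03,
    target_rate=0.04,
    rationale="Emphasizes the value proposition and reduces friction",
)

print(f"{plan.absolute_lift:.2%} absolute, {plan.relative_lift:.1%} relative")
```

Running it prints `1.00% absolute, 33.3% relative`. A one-point gain on a 3% baseline is a large relative effect.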

Step 2: Conduct Thorough Research and Analysis

Effective A/B testing isn’t about randomly trying different colors or button placements. It’s about making informed decisions based on data, user research, and proven conversion optimization principles. Before you design your test variations, invest time in understanding your current performance and identifying opportunities.

Analyze your existing data. Dive into your analytics platform to understand how users currently interact with your page. Look for patterns such as:

- High bounce rates indicating potential relevance or loading issues

- Drop-off points in your conversion funnel

- Traffic sources that convert better or worse than average

- Device or browser-specific performance issues

- Time-on-page metrics that suggest confusion or engagement
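Finding drop-off points usually starts with step counts exported from your analytics platform. The sketch below computes the share of users lost between consecutive steps and flags the largest leak. The step names and counts are hypothetical.

```python
def funnel_drop_offs(steps: list[tuple[str, int]]) -> list[tuple[str, float]]:
    """Share of users lost between each pair of consecutive funnel steps."""
    drop_offs = []
    for (name, count), (next_name, next_count) in zip(steps, steps[1:]):
        drop_offs.append((f"{name} -> {next_name}", 1 - next_count / count))
    return drop_offs


funnel = [
    ("Landing page", 10_000),
    ("Pricing page", 4_200),
    ("Sign-up form", 1_300),
    ("Account created", 900),
]

drop_offs = funnel_drop_offs(funnel)
for transition, share_lost in drop_offs:
    print(f"{transition}: {share_lost:.0%} lost")
worst = max(drop_offs, key=lambda item: item[1])
print(f"Largest drop-off: {worst[0]}")
```

In this made-up funnel, the step from the pricing page to the sign-up form loses the most users. That would make it a strong candidate for qualitative research and testing.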

Gather qualitative insights. Numbers tell you what is happening, but qualitative research tells you why. Use multiple research methods:

- User testing sessions where you watch real people interact with your page

- Heatmaps and scroll maps showing where users click and how far they scroll

- Session recordings revealing friction points and user confusion

- Customer surveys asking about obstacles to conversion

- Customer support tickets highlighting common questions or complaints

- Social media feedback and review analysis

Study your competition and industry benchmarks. See what high-converting companies in your space are doing. While you shouldn’t blindly copy competitors, understanding industry standards helps you identify potential improvements. Look at their value propositions, page layouts, trust signals, and calls to action.

Review conversion optimization best practices. Familiarize yourself with established principles like:

- The fold myth and how users actually scroll

- Visual hierarchy and eye-tracking patterns

- The psychology of color and contrast

- Effective copywriting techniques

- Form optimization strategies

- Trust and credibility signals

This research phase should reveal specific, data-backed hypotheses about what might be holding back your conversions. These insights become the foundation for high-impact tests.

Step 3: Prioritize Your Testing Ideas

By this point, you probably have more testing ideas than you can possibly execute. Since A/B tests take time to reach statistical significance, you need to prioritize ruthlessly, focusing on tests that are most likely to produce meaningful improvements.

Use a prioritization framework to objectively evaluate your testing ideas. The ICE framework is particularly popular and effective:

- Impact: How big an effect will this test have if it succeeds? (Score 1-10)

- Confidence: How confident are you that this test will succeed? (Score 1-10)

- Ease: How easy is this test to implement? (Score 1-10)

Calculate an ICE score by averaging or multiplying these three factors. Focus your efforts on ideas with the highest scores.
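As a rough illustration, the snippet below ranks a few testing ideas by ICE score. The ideas and scores are invented for the example, and the `Idea` class is hypothetical. It computes both the average and the product, so you can see whether the choice of aggregation changes the ranking.

```python
from dataclasses import dataclass
from math import prod
from statistics import mean


@dataclass(frozen=True)
class Idea:
    name: str
    impact: int      # 1-10
    confidence: int  # 1-10
    ease: int        # 1-10

    def scores(self) -> tuple[int, int, int]:
        return (self.impact, self.confidence, self.ease)

    def ice_average(self) -> float:
        return mean(self.scores())

    def ice_product(self) -> int:
        return prod(self.scores())


ideas = [
    Idea("Rewrite hero headline", impact=8, confidence=6, ease=9),
    Idea("Shorten checkout form", impact=9, confidence=7, ease=4),
    Idea("Change footer link color", impact=2, confidence=5, ease=10),
]

# Rank by the average; sort by Idea.ice_product instead to compare orderings.
for idea in sorted(ideas, key=Idea.ice_average, reverse=True):
    print(f"{idea.name}: average={idea.ice_average():.2f}, product={idea.ice_product()}")
```

With these particular scores, both methods produce the same order. The product penalizes a single low score more heavily, so the two methods can diverge when one dimension is weak.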

Consider testing high-traffic pages first. A test on a page with 100,000 monthly visitors will reach statistical significance much faster than one on a page with 1,000 visitors. High-traffic tests also have greater absolute impact on your business metrics.

Focus on elements close to conversion. Generally, the closer an element is to the final conversion action, the more impact changes will have. Testing your checkout page button will typically have more impact than testing your homepage navigation.

Start with high-impact, fundamental elements before moving to minor details. Test your value proposition, headlines, and primary calls to action before worrying about button shadows or footer text. The 80/20 rule is a useful heuristic here: a small share of your page elements likely drives most of your results.

Balance quick wins with long-term learning. While you want some tests that can deliver fast results, also invest in more complex tests that help you understand your audience better and build conversion optimization knowledge.

Step 4: Design Your Test Variations Thoughtfully

Now comes the creative phase where you design the alternative version you’ll test against your original. This step requires both art and science, as you need variations that are different enough to produce meaningful results but still aligned with your brand and business goals.

Make one significant change at a time for most tests. While there are advanced scenarios where multivariate testing makes sense, standard A/B tests should isolate a single variable or closely related group of changes. This allows you to understand exactly what drove any performance differences.

Examples of focused test variations include:

- Headlines: Test different value propositions, lengths, or emotional appeals

- Call-to-action buttons: Test copy, color, size, or placement

- Images: Test hero images, product photos, or whether images help or hurt

- Form fields: Test the number of fields, field labels, or form layout

- Social proof: Test testimonials, customer logos, or trust badges

- Copy length: Test long-form versus short-form content

- Pricing display: Test different ways to present prices or value

Ensure your variations are substantially different. Tiny changes like slightly different shades of the same color rarely produce detectable differences in conversion rates. Make bold changes that test meaningfully different approaches.

Maintain design consistency and quality. Your test variation should look professional and polished. A poorly designed variation might lose not because the concept is wrong, but because the execution is lacking. Both versions should represent quality user experiences.

Consider the context of your change. A variation shouldn’t exist in isolation. If you’re testing a more urgent headline, perhaps the call-to-action button should also reflect that urgency. The elements should work together coherently.

Document your variations thoroughly with screenshots and descriptions. This documentation becomes valuable for future reference, helping you avoid repeating tests and building institutional knowledge.

Step 5: Calculate Required Sample Size and Test Duration

One of the most common A/B testing mistakes is ending tests too early, before they reach the sample size needed to support a reliable conclusion. Stopping early leads to false positives, where you declare a winner based on random chance rather than a real performance difference.

Determine your minimum sample size before launching your test. This calculation depends on several factors:

- Your current conversion rate

- The minimum improvement you want to detect (minimum detectable effect)

- Your desired confidence level (typically 95%)

- Your desired statistical power (typically 80%)

Many online sample size calculators can tell you how many visitors you need in each variation to reach statistical significance. For example, suppose your current conversion rate is 2% and you want to detect a 20% relative improvement (to 2.4%) at 95% confidence and 80% power. You might need around 20,000 visitors per variation.

Estimate your test duration based on your traffic levels. If your page receives 5,000 visitors per week and you need 20,000 visitors per variation (40,000 total), your test will need to run for approximately eight weeks. Planning this upfront helps set realistic expectations.
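The calculation behind those calculators can be sketched with Python's standard library. This sketch assumes a two-sided, two-proportion z-test using the normal approximation. Commercial calculators may use different formulas or corrections, so expect their numbers to differ somewhat. The function names are illustrative.

```python
from math import ceil, sqrt
from statistics import NormalDist


def sample_size_per_variation(baseline: float, relative_mde: float,
                              alpha: float = 0.05, power: float = 0.80) -> int:
    """Visitors per variation for a two-sided, two-proportion z-test (normal approximation)."""
    p1 = baseline
    p2 = baseline * (1 + relative_mde)
    p_bar = (p1 + p2) / 2
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # about 1.96 for 95% confidence
    z_beta = NormalDist().inv_cdf(power)           # about 0.84 for 80% power
    numerator = (z_alpha * sqrt(2 * p_bar * (1 - p_bar))
                 + z_beta * sqrt(p1 * (1 - p1) + p2 * (1 - p2))) ** 2
    return ceil(numerator / (p2 - p1) ** 2)


def weeks_needed(per_variation: int, variations: int, weekly_visitors: int) -> float:
    """Test duration in weeks if all page traffic enters the test."""
    return per_variation * variations / weekly_visitors


n = sample_size_per_variation(baseline=0.02, relative_mde=0.20)
weeks = weeks_needed(n, variations=2, weekly_visitors=5_000)
print(f"{n:,} visitors per variation, about {weeks:.1f} weeks")
```

For the 2% baseline and 20% relative lift above, this comes to a little over 21,000 visitors per variation. At 5,000 visitors per week, that is roughly eight and a half weeks, in line with the estimates in the text.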

Account for traffic variability. Conversion rates often vary by day of week, time of month, or season. Your test should run long enough to capture these natural fluctuations. As a rule of thumb, run tests for at least one full business cycle (typically one to two weeks minimum) even if you reach your sample size sooner.

Resist the temptation to peek and decide early. Checking your results multiple times and stopping when you see a winner increases the false positive rate. Commit to your calculated sample size and duration upfront, or use sequential testing methods specifically designed for ongoing monitoring.

Consider using statistical significance calculators that account for the frequency of result checking, or commit to only analyzing results at predetermined intervals rather than continuously monitoring.
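To see why peeking matters, the simulation below runs many A/A tests. In an A/A test both arms share the same true conversion rate, so every declared winner is a false positive. The simulation compares two analysts:

- A "peeker" who checks at ten evenly spaced points and stops at the first significant result.
- An analyst who looks only once, at the planned sample size.

The traffic, conversion rate, number of looks, and seed are arbitrary assumptions chosen to keep the run fast.

```python
import random
from math import sqrt
from statistics import NormalDist


def z_test_p_value(conv_a: int, n_a: int, conv_b: int, n_b: int) -> float:
    """Two-sided p-value from a pooled two-proportion z-test."""
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:  # no conversions (or only conversions) so far: nothing to compare
        return 1.0
    z = (conv_b / n_b - conv_a / n_a) / se
    return 2 * (1 - NormalDist().cdf(abs(z)))


def aa_false_positive_rates(sims: int = 400, n_per_arm: int = 1_000, looks: int = 10,
                            rate: float = 0.05, alpha: float = 0.05,
                            seed: int = 7) -> tuple[float, float]:
    """Simulate A/A tests, where both arms share one true rate, under two stopping rules."""
    rng = random.Random(seed)
    checkpoints = {n_per_arm * k // looks for k in range(1, looks + 1)}
    peeking_hits = fixed_hits = 0
    for _ in range(sims):
        conv_a = conv_b = 0
        declared_winner = False
        for i in range(1, n_per_arm + 1):
            conv_a += rng.random() < rate
            conv_b += rng.random() < rate
            if i in checkpoints and not declared_winner:
                # The peeker stops at the first look that crosses the threshold.
                declared_winner = z_test_p_value(conv_a, i, conv_b, i) < alpha
        peeking_hits += declared_winner
        # The disciplined analyst looks once, at the planned sample size.
        fixed_hits += (z_test_p_value(conv_a, n_per_arm, conv_b, n_per_arm) < alpha)
    return peeking_hits / sims, fixed_hits / sims


peek_rate, fixed_rate = aa_false_positive_rates()
print(f"False positives with 10 peeks: {peek_rate:.1%}; with one final look: {fixed_rate:.1%}")
```

With these settings, the peeking rule typically flags well above the nominal 5%, while the single final look stays near it. Sequential testing methods are designed to allow repeated looks without this inflation.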

Step 6: Implement Your Test Correctly

Even the best-designed test is worthless if it’s implemented incorrectly. Technical implementation errors can invalidate your results, leading to decisions based on faulty data.

Choose the right testing platform for your needs. Popular options include:

- Google Optimize (formerly free and integrated with Google Analytics; Google discontinued it in September 2023)

- Optimizely (enterprise-level features)

- VWO (Visual Website Optimizer)

- Adobe Target (for Adobe ecosystem users)

- Convert (privacy-focused option)

- Unbounce (for landing pages specifically)

Each platform has different features, pricing, and complexity levels. Choose one that matches your technical skills and testing requirements.

Implement your testing code properly. Most A/B testing tools work by using JavaScript to modify your page content after it loads. Key implementation considerations include:

- Place the testing snippet in your page header to minimize flickering

- Ensure the code loads synchronously to avoid race conditions

- Test in multiple browsers and devices to confirm consistent behavior

- Verify that variations load correctly for all user segments

Set up proper tracking and goal configuration. Your testing platform needs to know what constitutes a conversion. Configure your goals carefully:

- Ensure conversion tracking fires correctly for all variations

- Set up any micro-conversions or secondary metrics you want to monitor

- Integrate with your analytics platform for deeper analysis

- Test the tracking manually before launching to real users

Split traffic evenly between variations unless you have a specific reason not to. A 50/50 split provides the fastest path to statistical significance. If you’re risk-averse, you can use a 90/10 or 80/20 split, but understand this will extend your test duration significantly.
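You can estimate the cost of an uneven split with a short calculation. The sketch below assumes both arms have roughly equal outcome variance, which is reasonable when conversion rates are similar. It asks how much total traffic an uneven split needs to match the precision of a 50/50 split.

```python
def traffic_multiplier(control_share: float) -> float:
    """Total traffic an uneven split needs, relative to 50/50, for equal precision.

    The variance of the difference between arms scales with 1/n_control + 1/n_variant.
    With N visitors and a control share s, that is (1/s + 1/(1 - s)) / N,
    which equals 4 / N at s = 0.5.
    """
    if not 0 < control_share < 1:
        raise ValueError("control_share must be strictly between 0 and 1")
    return (1 / control_share + 1 / (1 - control_share)) / 4


for share in (0.5, 0.8, 0.9):
    print(f"{share:.0%}/{1 - share:.0%} split: {traffic_multiplier(share):.2f}x the traffic of 50/50")
```

Under that assumption, an 80/20 split needs about 1.56 times the traffic of a 50/50 split, and a 90/10 split needs about 2.78 times. Test duration stretches by the same factors.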

Use proper randomization. Your testing platform should randomly assign users to variations based on a consistent identifier (usually a cookie). Ensure that users see the same variation consistently across multiple visits to avoid confusion.
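A common way to keep assignment consistent is to hash a stable identifier, such as the value stored in a cookie, together with an experiment name. The function below is a hypothetical sketch of that idea, not any specific vendor's algorithm. The same `user_id` always maps to the same variation, and the hash spreads users roughly evenly across buckets.

```python
import hashlib


def assign_variation(user_id: str, experiment: str,
                     variations: tuple[str, ...] = ("control", "variant")) -> str:
    """Deterministically map a user to one variation of one experiment.

    Hashing the experiment name with the user ID means the same visitor always
    lands in the same bucket, while different experiments get independent splits.
    """
    digest = hashlib.sha256(f"{experiment}:{user_id}".encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:8], "big") % len(variations)
    return variations[bucket]


print(assign_variation("visitor-123", "cta-copy-test"))
```

Including the experiment name in the hash means a user's bucket in one test does not determine their bucket in another.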

Step 7: Monitor Test Performance and Watch for Issues

Once your test is live, your job isn’t done. Active monitoring helps you catch technical issues early and ensures your test is running smoothly.

Conduct a quality assurance check within the first few hours of launching. Verify that:

- Both variations are displaying correctly

- Traffic is splitting as expected

- Conversions are being tracked properly

- No JavaScript errors are occurring

- The test works across different browsers and devices

- Mobile experience is acceptable

Monitor for anomalies that might invalidate your test. Watch for:

- Sudden traffic spikes or drops that might indicate technical issues

- Conversion rate changes that seem unrealistic

- One variation receiving significantly more or less traffic than expected

- Unusually high bounce rates suggesting page load issues

- External factors like site-wide promotions or outages affecting results
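A simple way to check whether traffic is splitting as expected is a chi-square goodness-of-fit test on visitor counts, often called a sample ratio mismatch (SRM) check. The sketch below uses only the standard library, and the visitor counts are made up. The strict 0.001 alert threshold is an assumption, though many teams use a cutoff like it because a real mismatch usually signals a bug rather than bad luck.

```python
from math import erfc, sqrt


def srm_p_value(visitors_a: int, visitors_b: int, expected_share_a: float = 0.5) -> float:
    """Chi-square goodness-of-fit test (1 degree of freedom) for sample ratio mismatch."""
    total = visitors_a + visitors_b
    expected_a = total * expected_share_a
    expected_b = total - expected_a
    chi2 = ((visitors_a - expected_a) ** 2 / expected_a
            + (visitors_b - expected_b) ** 2 / expected_b)
    # With 1 degree of freedom, P(chi-square > chi2) = erfc(sqrt(chi2 / 2)).
    return erfc(sqrt(chi2 / 2))


SRM_THRESHOLD = 0.001  # assumption: a strict cutoff, since a real mismatch usually means a bug

p = srm_p_value(10_000, 10_600)
if p < SRM_THRESHOLD:
    print(f"Possible sample ratio mismatch (p = {p:.2g}); check assignment and tracking")
else:
    print(f"Split is consistent with the expected ratio (p = {p:.3f})")
```

For 10,000 versus 10,600 visitors, the p-value is far below 0.001, so the check would flag the test for investigation.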

Check for segment-level differences. While you shouldn’t make premature conclusions, monitoring how different user segments respond can provide valuable insights. Look at performance across:

- Traffic sources (organic, paid, social, email)

- Device types (desktop, mobile, tablet)

- New versus returning visitors

- Geographic regions

- Time of day or day of week

Document any incidents or unusual events that occur during your test period. If your site experiences downtime, you run a major promotion, or significant news about your company or industry breaks, note these events. They may impact your results or require you to extend the test duration.

Resist the urge to make changes mid-test. Once your test is live, changing anything about either variation or the test setup invalidates your results. If you discover a critical issue, it’s better to stop the test, fix the problem, and restart than to continue with compromised data.

Step 8: Analyze Results with Statistical Rigor

When your test reaches the predetermined sample size and duration, it’s time for analysis. This step requires careful statistical thinking to avoid common pitfalls that lead to false conclusions.

Wait for statistical significance before declaring a winner. A 95% confidence level means that, if there were truly no difference between the variations, a result at least as extreme as the one you observed would occur less than 5% of the time by chance alone. It does not mean there is a 95% probability that the winning variation is better. Most testing platforms calculate significance automatically, but understand what it means rather than blindly trusting the number.
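For a single control and variant, the significance figure many platforms report can be approximated with a two-proportion z-test. The sketch below returns the p-value along with a confidence interval for the difference in conversion rates, which is often more informative than the p-value alone. The conversion counts are hypothetical. Commercial tools may use other methods, such as Bayesian or sequential approaches.

```python
from math import sqrt
from statistics import NormalDist


def compare_conversion_rates(conv_a: int, n_a: int, conv_b: int, n_b: int,
                             confidence: float = 0.95) -> dict[str, float]:
    """Two-sided pooled z-test plus an unpooled confidence interval for rate_b - rate_a."""
    rate_a, rate_b = conv_a / n_a, conv_b / n_b
    diff = rate_b - rate_a

    # Pooled standard error: assumes no difference, used for the p-value
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se_pooled = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    p_value = 2 * (1 - NormalDist().cdf(abs(diff / se_pooled)))

    # Unpooled standard error: used for the interval around the observed difference
    se_unpooled = sqrt(rate_a * (1 - rate_a) / n_a + rate_b * (1 - rate_b) / n_b)
    z_crit = NormalDist().inv_cdf(1 - (1 - confidence) / 2)
    return {
        "rate_a": rate_a,
        "rate_b": rate_b,
        "relative_lift": diff / rate_a,
        "p_value": p_value,
        "ci_low": diff - z_crit * se_unpooled,
        "ci_high": diff + z_crit * se_unpooled,
    }


result = compare_conversion_rates(conv_a=420, n_a=21_000, conv_b=510, n_b=21_000)
for key, value in result.items():
    print(f"{key}: {value:.4f}")
```

Here the interval for the absolute difference sits entirely above zero, and the p-value is well under 0.05.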

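Confirm the test was adequately powered. Statistical power (typically 80%) is the probability of detecting a real difference at least as large as your minimum detectable effect, if one exists. It is set by the sample size you planned in Step 5, so a test stopped short of that sample is underpowered. Low-power tests might fail to identify winners even when one variation truly performs better.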

Look beyond the primary metric. While your main conversion goal should drive the decision, examine secondary metrics to ensure you’re not creating unintended negative consequences:

- Did one variation improve conversions but reduce average order value?

- Did more users convert but with worse engagement metrics?

- Did mobile performance suffer while desktop improved?

- Did conversion quality change based on lead scores or customer lifetime value?

Segment your results to understand how different user groups responded. You might discover that your variation won overall but performed poorly for your most valuable traffic sources. These insights help you make nuanced decisions about implementation.
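Segment-level rates are straightforward to compute once results are exported. The counts below are invented to show the pattern described above: a variation that wins overall but underperforms in one segment. Each segment contains fewer visitors than the whole test, so treat these splits as exploratory and check their significance before acting on them. The `rates_by_segment` helper is hypothetical.

```python
from collections import defaultdict


def rates_by_segment(rows: list[tuple[str, str, int, int]]) -> dict[str, dict[str, float]]:
    """rows are (segment, variation, visitors, conversions); returns segment -> variation -> rate."""
    table = defaultdict(dict)
    for segment, variation, visitors, conversions in rows:
        table[segment][variation] = conversions / visitors
    return dict(table)


rows = [
    ("desktop", "control", 12_000, 300),
    ("desktop", "variant", 12_000, 348),
    ("mobile", "control", 9_000, 135),
    ("mobile", "variant", 9_000, 126),
]

segments = rates_by_segment(rows)
for segment, rates in segments.items():
    lift = rates["variant"] / rates["control"] - 1
    print(f"{segment}: control {rates['control']:.2%}, "
          f"variant {rates['variant']:.2%}, lift {lift:+.1%}")
```

In this example, desktop shows a 16% lift while mobile shows a decline of about 7%, even though the variant is ahead overall.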

Calculate the practical significance of your results. A test might be statistically significant but practically meaningless. If you spent two months testing to achieve a 0.1% conversion rate increase, the effort might not be worthwhile. Consider the absolute impact on your business metrics and the opportunity cost of the testing time.

Be honest about inconclusive results. Not every test produces a clear winner, and that’s okay. Inconclusive results where neither variation significantly outperforms the other are valuable learning opportunities. They tell you that particular change isn’t a major conversion driver for your audience.

Watch out for common statistical errors:

- Multiple comparison problems from testing too many variations simultaneously

- Early peeking bias from checking results repeatedly

- Novelty effects where the new variation temporarily performs better

- Sample ratio mismatch where traffic isn’t splitting evenly
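The multiple comparison problem is easy to quantify. The sketch below computes the chance of at least one false positive when each of several variations is compared to control at a 5% threshold. It also computes the stricter per-comparison threshold that a Bonferroni correction would use. The family-wise formula assumes independent comparisons, which comparisons sharing a control are not, so treat it as a rough guide. The Bonferroni threshold holds without that assumption but is conservative.

```python
def family_wise_error_rate(alpha: float, comparisons: int) -> float:
    """Chance of at least one false positive across independent comparisons at level alpha."""
    return 1 - (1 - alpha) ** comparisons


def bonferroni_alpha(alpha: float, comparisons: int) -> float:
    """Per-comparison threshold that keeps the family-wise error rate at or below alpha."""
    return alpha / comparisons


for k in (1, 3, 5):
    print(f"{k} variation(s) vs. control: "
          f"chance of a false winner = {family_wise_error_rate(0.05, k):.1%}, "
          f"Bonferroni threshold = {bonferroni_alpha(0.05, k):.4f}")
```

With five variations, the chance of at least one false winner rises to roughly 23%.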

Step 9: Implement Winners and Learn from Losers

Analysis complete and winner identified—now what? The implementation and learning phase is where testing delivers actual business value.

Roll out winning variations to all users promptly. The longer you delay, the more conversions you’re leaving on the table. Most testing platforms make this easy with a single click, but ensure the rollout happens cleanly:

- Remove testing code after implementing the winner permanently

- Verify the winning variation works correctly at full scale

- Update any related assets like mobile apps or email templates

- Document the change for future reference

Validate the lift over time. Monitor your conversion rate after implementation to confirm the improvement persists. Sometimes test results don’t hold up in the real world due to novelty effects or seasonal factors. Give it at least two to four weeks and compare performance to pre-test baselines.

Calculate the actual business impact of your winning test. Convert the percentage improvement into concrete numbers:

- Additional revenue generated

- Increased lead volume

- Cost per acquisition improvement

- Lifetime value impact

Quantifying results helps justify continued investment in optimization and demonstrates the value of your testing program.
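A back-of-the-envelope translation from lift to revenue can be sketched as follows. All inputs are hypothetical. The calculation assumes the measured lift holds after rollout and that average order value does not change; confirm both with post-launch monitoring.

```python
def incremental_impact(monthly_visitors: int, baseline_rate: float,
                       relative_lift: float, avg_order_value: float) -> dict[str, float]:
    """Translate a measured relative lift into extra monthly conversions and revenue.

    Assumes the lift holds after rollout and average order value is unchanged.
    """
    baseline_conversions = monthly_visitors * baseline_rate
    extra_conversions = baseline_conversions * relative_lift
    return {
        "baseline_conversions": baseline_conversions,
        "extra_conversions": extra_conversions,
        "extra_monthly_revenue": extra_conversions * avg_order_value,
        "extra_annual_revenue": extra_conversions * avg_order_value * 12,
    }


impact = incremental_impact(monthly_visitors=100_000, baseline_rate=0.02,
                            relative_lift=0.10, avg_order_value=80.0)
for key, value in impact.items():
    print(f"{key}: {value:,.0f}")
```

With these inputs, a 10% relative lift on a 2% baseline adds 200 conversions and about $16,000 in revenue per month.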

Learn from losing variations as much as winning ones. A variation that decreased conversions tells you something important about your audience. Ask yourself:

- Why did this approach fail when the hypothesis seemed sound?

- What does this reveal about user preferences or psychology?

- Are there elements of the losing variation that could work in a different context?

- Does this result contradict conventional wisdom or industry best practices?

Share results across your organization. Testing insights shouldn’t live in a silo. Distribute learnings to:

- Design teams who can apply principles to new projects

- Copywriters who can adapt messaging strategies

- Product teams who can incorporate user preferences

- Leadership who need to understand customer behavior

Build a testing knowledge base that documents all tests, results, and learnings. Over time, this becomes an invaluable resource that prevents repeated tests and helps new team members understand what works for your specific audience.

Step 10: Iterate and Develop a Testing Roadmap

A single successful A/B test is great. A continuous optimization program that systematically improves your conversion rates over time is transformative. The final step is building a sustainable, long-term testing culture.

Develop a testing roadmap that plans out your next series of tests. Based on your research, prioritization, and previous results, outline:

- The next five to ten tests you plan to run

- The sequence and dependencies between tests

- Expected timelines and resource requirements

- Key questions you’re trying to answer

This roadmap keeps your optimization efforts strategic rather than reactive and helps secure ongoing resources and buy-in.

Build on previous winners with follow-up tests. If a more urgent headline improved conversions, test different types of urgency. If reducing form fields helped, test removing even more fields. Because each follow-up test starts from the previous winner, improvements compound over time.

Expand testing across your funnel. Once you’ve optimized your primary conversion page, move to other stages:

- Top-of-funnel pages that drive initial engagement

- Middle-funnel nurture sequences

- Post-conversion onboarding experiences

- Cross-sell and upsell opportunities

Retest periodically. User preferences and market conditions change. A test that lost two years ago might win today. Revisit major tests annually or when you have reason to believe conditions have shifted.

Invest in testing infrastructure and skills. As your program matures, consider:

- Training team members in statistics and experimental design

- Upgrading to more advanced testing platforms

- Developing custom testing capabilities

- Hiring specialists if testing becomes a major competitive advantage

Foster a testing culture where experimentation is encouraged and data-driven decision-making is the norm. Celebrate learning regardless of whether tests win or lose. Share failures as openly as successes to create psychological safety around taking calculated risks.

Set ongoing performance benchmarks. Track metrics like:

- Tests launched per quarter

- Percentage of tests reaching statistical significance

- Average lift from winning tests

- Cumulative conversion rate improvement

- Return on investment of your optimization program

These metrics help you continuously improve your testing practice itself.
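Several of these program metrics can be computed from a simple log of finished tests. The `ExperimentResult` record and the sample results below are hypothetical. Cumulative lift is computed by multiplying lifts rather than adding them. This assumes each win holds and that the tests affected independent parts of the experience.

```python
from dataclasses import dataclass
from math import prod


@dataclass(frozen=True)
class ExperimentResult:
    name: str
    significant: bool
    relative_lift: float  # measured lift of variant over control, e.g. 0.05 for +5%


def program_metrics(results: list[ExperimentResult]) -> dict[str, float]:
    winners = [r for r in results if r.significant and r.relative_lift > 0]
    return {
        "share_significant": sum(r.significant for r in results) / len(results),
        "average_winning_lift": (sum(r.relative_lift for r in winners) / len(winners)
                                 if winners else 0.0),
        # Lifts compound multiplicatively (assumes each win holds and tests don't overlap)
        "cumulative_lift": prod(1 + r.relative_lift for r in winners) - 1,
    }


quarter = [
    ExperimentResult("Headline rewrite", significant=True, relative_lift=0.05),
    ExperimentResult("Shorter form", significant=True, relative_lift=0.08),
    ExperimentResult("Button color", significant=False, relative_lift=0.01),
    ExperimentResult("Social proof block", significant=True, relative_lift=0.10),
]

metrics = program_metrics(quarter)
for key, value in metrics.items():
    print(f"{key}: {value:.1%}")
```

Here, three wins of 5%, 8%, and 10% compound to about 24.7%, not the 23% you would get by adding them.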

Conclusion: From Testing to Transformation

A/B split testing is not a one-time activity or a quick fix for conversion problems. It’s a systematic discipline that, when practiced consistently with rigor and strategic thinking, can transform your marketing results. The difference between companies that see marginal benefits from testing and those that achieve dramatic conversion improvements comes down to following a proven methodology.

By establishing clear goals, conducting thorough research, prioritizing strategically, designing thoughtful variations, calculating proper sample sizes, implementing tests correctly, monitoring actively, analyzing rigorously, learning from results, and iterating continuously, you create a conversion optimization engine that compounds gains over time.

Remember that even small percentage improvements can have massive business impact at scale. A 10% conversion rate increase on a page generating $1 million in revenue translates to roughly $100,000 in additional revenue, assuming average order value holds steady. String together multiple winning tests across your funnel, and you're looking at transformational business results.

Start with one well-executed test following these ten steps. Learn from the process. Refine your approach. Then do it again. Over time, this systematic approach to A/B testing will become your sustainable competitive advantage in an increasingly crowded digital marketplace.